Automatic differentiation relies on the chain rule, so there are two possible ways to apply it: from the inside out (forward mode) and from the outside in (reverse mode). Tensorflow uses reverse mode automatic differentiation. The Automatic differentiation Wikipedia page has a couple of step-by-step examples of forward and reverse mode that are quite easy to follow. The reverse mode is a bit harder to see, probably because of the notation used on Wikipedia, but someone made a simpler decomposition that is easier to understand.

Gradients of common mathematical operations are included in Tensorflow so they can be applied directly during the reverse mode automatic differentiation process. In fact, if you want to implement a new operation it has to inherit from Decop and its gradient has to be "registered" (`RegisterGradient`). For example, the derivative of f(x)=sin(x) is defined in `python/ops/math_grad.py`.

The optimizer used here is `GradientDescentOptimizer(learning_rate=0.01)`, created inside `variable_scope("optimization") as scope:`, and its `minimize` function can be decomposed into separate steps. This decomposition can be useful in some cases. For instance, you may want to process or keep track of the gradients to understand the graph computations, or you may want to calculate the gradients with respect to only some variables and not all of them. Take the function $z = f(x,y) = 2x - y$, for which we want to calculate both partial derivatives: $\frac{\partial z}{\partial x} = 2$ and $\frac{\partial z}{\partial y} = -1$.

Dropout is an incredibly popular method to combat overfitting in neural networks. The idea behind Dropout is to approximate an exponential number of models and combine them to predict the output. In machine learning, combining different models to tackle a problem has been shown to perform well (e.g. AdaBoost), as has combining models trained on different parts of the dataset.
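The Dropout idea described in this section can be sketched in a few lines of plain Python. This is only an illustration, not Tensorflow's implementation: the function name `dropout`, the `keep_prob` parameter and the choice of "inverted" dropout (rescaling the surviving units by `1 / keep_prob`) are assumptions made for the example. Each call draws a random mask, so each call effectively picks one "thinned" network out of the 2^n possible ones for n units.

```python
import random


def dropout(activations, keep_prob, rng=random):
    """Inverted dropout on a list of floats (hypothetical helper).

    Each unit is kept with probability `keep_prob` and scaled by
    1 / keep_prob so the expected value of every unit is unchanged.
    Dropped units are set to 0.0.
    """
    if not 0.0 < keep_prob <= 1.0:
        raise ValueError("keep_prob must be in (0, 1]")
    out = []
    for a in activations:
        if rng.random() < keep_prob:
            out.append(a / keep_prob)  # unit survives, rescaled
        else:
            out.append(0.0)  # unit is dropped for this pass
    return out


if __name__ == "__main__":
    layer_output = [0.5, 1.0, 1.5, 2.0]
    # A different mask (a different sub-network) is sampled on every call.
    print(dropout(layer_output, keep_prob=0.5))
    print(dropout(layer_output, keep_prob=0.5))
```

At prediction time the mask is normally switched off (here, `keep_prob=1.0`), which is what lets the full network act as an approximate average of all the thinned ones.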
There are three types of differentiation:

- Numerical: it uses the definition of the derivative (lim) to approximate the result.
- Symbolic: manipulation of mathematical expressions (the one we learn in high school).
- Automatic: repeatedly using the chain rule to break down the expression and use simple rules to obtain the result.

As the author of the answer (Salvador Dali) on stackoverflow points out, symbolic and automatic differentiation look similar but they are different.
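To make the difference between numerical and automatic differentiation concrete, here is a minimal sketch in plain Python applied to z = f(x,y) = 2x - y. The `Dual` class and the two helper functions are hypothetical names introduced for this example. Note that the sketch uses forward mode (dual numbers) because it fits in a few lines; Tensorflow itself uses reverse mode.

```python
class Dual:
    """Number val + dot*eps with eps**2 == 0; `dot` carries the derivative."""

    def __init__(self, val, dot=0.0):
        self.val = val
        self.dot = dot

    @staticmethod
    def lift(other):
        # Treat plain numbers as constants (derivative 0).
        return other if isinstance(other, Dual) else Dual(other)

    def __add__(self, other):
        other = Dual.lift(other)
        return Dual(self.val + other.val, self.dot + other.dot)

    __radd__ = __add__

    def __sub__(self, other):
        other = Dual.lift(other)
        return Dual(self.val - other.val, self.dot - other.dot)

    def __rsub__(self, other):
        return Dual.lift(other) - self

    def __mul__(self, other):
        other = Dual.lift(other)
        # Product rule: (uv)' = u'v + uv'
        return Dual(self.val * other.val,
                    self.dot * other.val + self.val * other.dot)

    __rmul__ = __mul__


def f(x, y):
    return 2 * x - y


def numerical_partials(func, x, y, h=1e-6):
    """Central finite differences: an approximation of the limit definition."""
    dx = (func(x + h, y) - func(x - h, y)) / (2 * h)
    dy = (func(x, y + h) - func(x, y - h)) / (2 * h)
    return dx, dy


def automatic_partials(func, x, y):
    """Forward-mode AD: seed one input's derivative with 1 per pass."""
    dx = func(Dual(x, 1.0), Dual(y, 0.0)).dot
    dy = func(Dual(x, 0.0), Dual(y, 1.0)).dot
    return dx, dy


if __name__ == "__main__":
    print(numerical_partials(f, 3.0, 1.0))
    print(automatic_partials(f, 3.0, 1.0))
```

The numerical version only approximates the derivatives and depends on the step size `h`, while the automatic version applies exact rules (sum, difference, product) operation by operation. Forward mode needs one pass per input variable, which is one reason reverse mode is preferred when a function has many inputs and a single output, as a loss function does.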

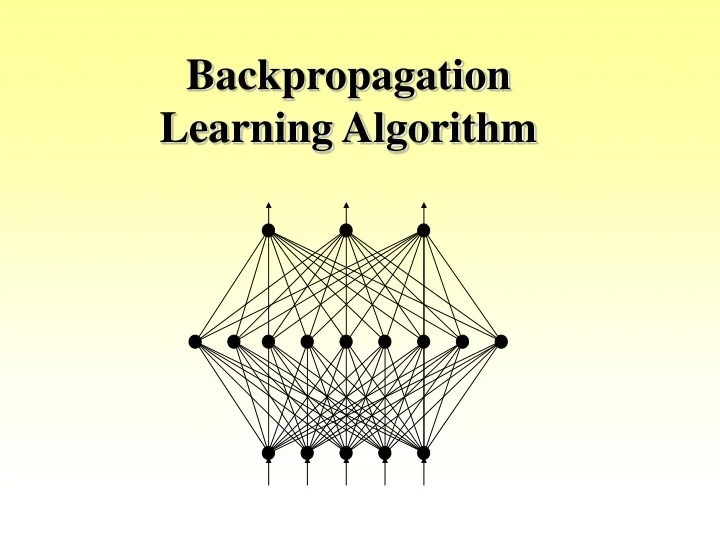

Manipulating any type of neural network involves dealing with the backpropagation algorithm, so it is key to understand concepts such as derivatives, the chain rule, gradients, etc. It is often important not only to understand them theoretically but also to be able to play around with them, and that is the goal of this post. Most of the information presented has been collected from different posts on stackoverflow.
